Intro & Multi-GPU Technology, Born In 1998

The day before I jumped on a plane from Australia to the United States to cover NVIDIA's GPU Technology Conference (GTC 2016), I did some last minute testing on some games so that I had a bunch of work I could write about (with all of the performance scores complete) while I was in the air, in the airline lounges (where I'm writing this), and in my hotel room. Well, I was testing Hitman, Far Cry Primal, and The Division and ran into a slew of issues, which inspired this scathing rage on the current state of multi-GPU support in today's games - today, being 2016.

Let's quickly recap that I'm not new to GPUs, multi-GPUs, or serious enthusiast setups. I imported one of the very first 3DFX Voodoo video cards into Australia all the way back in the mid-90s and even had Voodoo 2's in SLI - which at the time, would be like running 4 x Titan X/Fury X cards in tandem. It was insanity, but the additional performance in the games that supported both 3DFX and SLI at the time was oh so worth it.

I didn't think SLI was the future back then, as it only allowed you to jump from 800x600 to 1024x768 at the time - which was huge, in a world of 640x480. But, we're at a stage now that we need perfect multi-GPU scaling and technology, as we head into these higher and higher resolutions and most of all - VR.

I have been running SLI and Crossfire setups for the better part of 20 years now, and right now is the worst it has ever been. That sentence alone is going to have AMD and NVIDIA emailing me, as well as some of you guys and girls thinking "what the hell is Anthony smoking?" - surely, I have to be jetlagged or something, right?

Multi-GPU support in today's games SUCKS

No. Multi-GPU support in games - and let me clarify that as multi-GPU in games, not the technology itself - is not just lackluster. It fuc*ing sucks. There, I swore. That's the first time I've officially sworn in an article for TweakTown and I've written nearly 14,000 articles for the site now.

The technology itself is more amazing now than it ever has been, and that's something we'll continue on the next page. But for now, a quick rage about multi-GPU support in games. It's just not there. It really isn't, and this is because of a few reasons.

You know what? It's because developers like Ubisoft don't even provide their staff - who are developing games with budgets between $10 million and $100 million - with 4K monitors. Ugh, seriously.

The Technology Now, is More Than Awesome

GPUs Are Already Insanely Fast

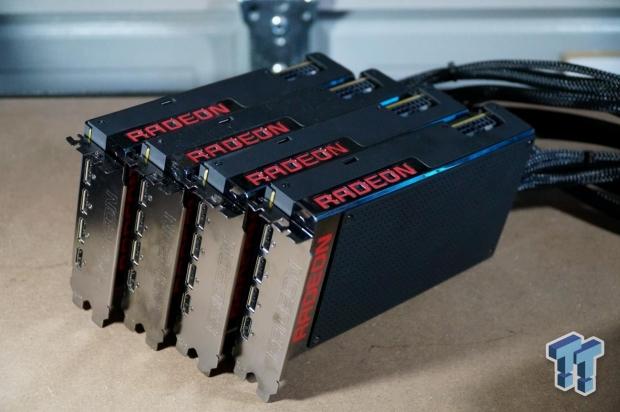

The GPU technology we have right now, without taking into consideration the next-gen GPUs that will launch in the coming months, is insane. Even the mid-range cards like the Radeon R9 290 and GeForce GTX 970 are great cards to throw into a multi-GPU setup.

Ramp up to something like the GTX 980 Ti in SLI or the R9 Fury in Crossfire, and you have a setup that can easily handle 4K gaming at 60FPS and beyond. We aren't at the crux of the problem yet, as we're still only thinking about the technology.

Think along the lines of 'can the technology get better?' or 'wait until next-gen' and you'd be wrong. The technology isn't the problem. NVIDIA and AMD are mostly not at fault here, and while they do sit at the table of the issue, they aren't the biggest contributing factor for why multi-GPU support in games sucks. We'll get to that in a bit. I'm not done establishing the basis for my multi-GPU gaming sucks rage-a-thon yet.

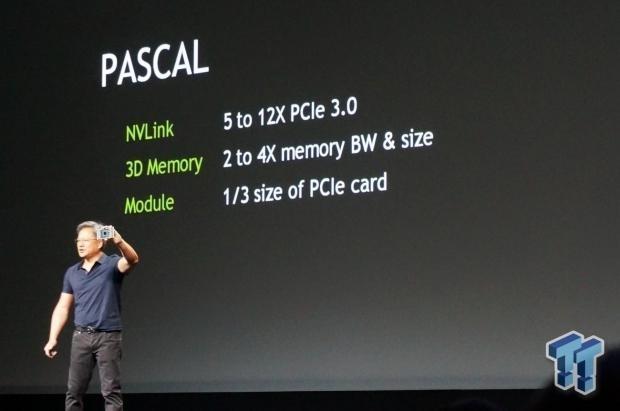

GPU Technology Is About To Go Next-Gen

So above, I said that one of the trains of thought would be to 'wait until next-gen' but that won't fix anything. If anything, it'll exacerbate the issue. We'll have infinitely faster GPUs thanks to NVIDIA's Pascal architecture and AMD's much touted Polaris architecture. But, the multi-GPU shit we put up with in games will still be there, and in fact - it'll be worse.

You would have to plonk down $1000+ for two next-gen, enthusiast video cards from either AMD or NVIDIA. When you get those two cards home (or delivered), you're going to want to put them through their paces - but how do you do that?

3DMark? Heaven? Wouldn't you want to throw your SLI/CF'd next-gen GPUs into a game like The Division, Far Cry Primal, Hitman, or Quantum Break (just to name a very select few) and enjoy unrivalled performance like the box or the marketing says? Yeah, you're shit out of luck there.

Most of the time, you're better off - and sometimes advised by the game developer, or sites like TweakTown - taking one of your video cards out, and using one for now. Do you know how much it pains me to have to write a news article telling you to take one of those $200, $300, $500, or even more expensive video cards out of your machine because there's no support for multi-GPUs in a game with a budget of $20 million, or sometimes more? Ugh.

Even Gaming Monitors Are At Their Peak

Heck, gaming monitors in 2015 totally exploded - and went right up to 2560x1440 @ 144Hz as the new "enthusiast/professional" gaming standard - while ASUS pushed boundaries by releasing their PG279Q monitor with a 2560x1440 native resolution and insane 165Hz refresh rate, all with NVIDIA's G-Sync technology on top.

For UltraWide fans like myself, the Acer Predator X34 became my gaming monitor of choice - with its beautiful native resolution of 3440x1440. But it was the 100Hz refresh rate that got me, alongside NVIDIA's excellent G-Sync technology that made it my new monitor for, well, everything - gaming, working, and everything in between.

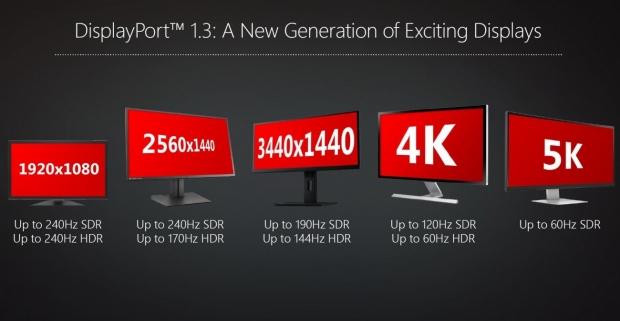

Next-Gen Displays @ 240Hz+ and VR Headsets

DisplayPort 1.4 - I'm Looking at You

One of the things I'm super pumped for this year is DisplayPort 1.4, which will allow monitors to scale to heights that are simply impossible today - and I mean completely, technologically impossible. HDMI 2.0 and DP1.2 have hit their ceilings at 4K60, but we all want what Agent Smith yearned for in The Matrix Reloaded - more, more, more.

DP1.4 has 32.4Gbps of bandwidth, and will see monitors scaling right up to 8K (7680x4320) at 60Hz, 4K at 240Hz (!!!), and even 1440p and 1080p at an insane 240Hz. Even 3440x1440 gets some DP1.4 love, with support for up to 190Hz - I know which monitor I'm waiting for this year.
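To get a feel for how demanding those modes are, here's a rough sketch of the raw pixel data rate each one needs. It assumes 24 bits per pixel and ignores blanking intervals, link encoding overhead, and any compression, so treat the output as a ballpark rather than a spec figure. The function name and the mode list are just for illustration, and the 32.4 link figure quoted above is taken here as gigabits per second.

```python
# Ballpark only: raw pixel data, no blanking, no encoding overhead, no compression.
LINK_GBPS = 32.4  # figure quoted in the article, assumed to mean gigabits per second


def pixel_data_rate_gbps(width: int, height: int, refresh_hz: int,
                         bits_per_pixel: int = 24) -> float:
    """Return the uncompressed pixel data rate in gigabits per second."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Modes mentioned in the article (4K @ 60Hz added as a baseline).
modes = {
    "4K @ 60Hz": (3840, 2160, 60),
    "4K @ 240Hz": (3840, 2160, 240),
    "8K @ 60Hz": (7680, 4320, 60),
    "3440x1440 @ 190Hz": (3440, 1440, 190),
}

for name, (width, height, hz) in modes.items():
    rate = pixel_data_rate_gbps(width, height, hz)
    share = rate / LINK_GBPS * 100
    print(f"{name}: {rate:.2f} Gbps raw ({share:.0f}% of the quoted link figure)")
```

Any mode where the raw rate comes out above the link figure would need compression or reduced color data to fit, which this sketch deliberately doesn't model.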

But this raises the question - what the hell is going to drive 4K @ 120FPS? Right now, no single GPU solution will do it. The next-gen video cards, no matter how fast they are, simply won't be powerful enough to handle 4K @ 120FPS consistently without heavily modifying the in-game settings.

Virtual Reality Could Be The Solution

In all reality, pun not intended, VR could be the saving grace of multi-GPU technology. Thanks to virtual reality rendering two images at once - one image per eye - multi-GPU solutions could not just benefit, but they could significantly increase performance. And by significantly, I mean close to 100% scaling with two GPUs - something we don't see much outside of synthetic benchmarks like 3DMark and Heaven.

If NVIDIA and AMD play their cards right - and they both seem to be holding pocket aces right now - VR could be the solution to my entire rage on multi-GPU performance. It gives a reason to own a second video card and receive a huge performance benefit.

It would make perfect sense for NVIDIA and AMD to do this, as VR game development is tied directly to the PC and doesn't need to worry about a huge potato box holding it back. The more power that the gaming PC in question has, the better performance, presence and experience you're going to have in your Oculus Rift or HTC Vive.

Why We Don't Need Multiple GPUs Right Now

We Need a Reason to Own Multiple GPUs; VR???

Alright, I've provided my case. Right now, multi-GPU setups suck. They're cumbersome, require too much configuration, testing, hair being pulled out - and most of all, money. Multiple video cards are super expensive, and with that money should come peace of mind - but it doesn't.

You spend more money, to spend more time, on an experience that should be better - not worse, where you're forced to disable one of your expensive video cards so the game will even work. Sure, NVIDIA and AMD sometimes have day one drivers ready for whichever game has just launched, but it's rarely perfect.

During my testing, I had countless problems trying to get SLI and Crossfire working in new games. Furthermore, I never received anywhere near 100% scaling - which is a huge problem. If you don't know what "scaling" means when it comes to multiple video cards, here's a quick lesson:

If you have one card, you'll be using 100% of your video card's available horsepower. If you add another card, you want to see another 100% being used (thus, double the performance). But, you don't - most of the time you'll receive somewhere between 20-60% scaling, which means you're getting around, and sometimes less than, 1.5x performance - for having 2x the GPU power.

During my time working in IT retail, I sold custom gaming PCs for a living. I always said to consumers wanting SLI/CF rigs that they would be spending "100% more money on another video card, for 20-60% more performance" - which always had people thinking. If you're dumping another entire video card in the system, your system should be getting another 100% performance - but it doesn't.
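To put numbers on that, here's a minimal sketch of the scaling arithmetic. The frame rates are hypothetical, picked only so the results land on the 20%, 60%, and 100% cases described above, and the function names are mine rather than anything from a benchmarking tool.

```python
def scaling_percent(single_fps: float, dual_fps: float) -> float:
    """Extra performance gained from the second card, as a % of one card."""
    if single_fps <= 0:
        raise ValueError("single_fps must be positive")
    return (dual_fps / single_fps - 1.0) * 100.0


def speedup_from_scaling(scaling_pct: float) -> float:
    """Convert a scaling percentage into an overall speedup multiplier."""
    return 1.0 + scaling_pct / 100.0


# Hypothetical frame rates (one card, two cards), for illustration only.
examples = {
    "perfect scaling": (60.0, 120.0),
    "top of the usual range": (60.0, 96.0),
    "bottom of the usual range": (60.0, 72.0),
}

for label, (one_card, two_cards) in examples.items():
    pct = scaling_percent(one_card, two_cards)
    multiplier = speedup_from_scaling(pct)
    print(f"{label}: {pct:.0f}% scaling = {multiplier:.1f}x performance for 2x the cards")
```

Run it with your own single-card and dual-card frame rates from the same game and settings, and you can see how far off 100% you really are.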

It's not perfect, and this isn't NVIDIA or AMD's fault - sure, they could help - but their hands are tied behind their backs, with game developers mostly at fault. Game developers - and I mean virtually everyone, you're all pathetic. You've sold your souls for consoles, and now high-end PCs are suffering - except Oculus and HTC are here to save the day with VR gaming. That last bit was a bit alarming, but this is how I feel.

Sure, not everyone has an enthusiast PC, but that's like saying that huge Hollywood movies shouldn't have the best special effects - just, cos. Or like whinging because expensive cars are expensive. Enthusiasts are a huge part of PC gaming, and thus, they should be treated well with increased support for multi-GPU setups.

What Do Our Friends Think & Final Thoughts

What Can We Do?

What can we do? Push... push NVIDIA and AMD to improve SLI and Crossfire scaling - but we really should be running towards game developers with our pitchforks. Those (insert the worst word here) developers have sold their souls to consoles, and I really shouldn't be blaming "game developers", I should be aiming my fire at the studios.

The likes of Ubisoft, Activision, Microsoft, and everyone in between constantly bang on the drum of "we are making the PC version of X/Y/Z game something special" before it's released - and then it comes out, and it's a steaming pile of shit on the PC.

What Our Friends Think

When I started writing this article, I blasted on Twitter and tagged some of my friends in the industry - these are people I respect more than they know, and I'll include their quick thoughts here. This in no way represents their entire opinion, but it's a quick glance into what I was raging about when it came to the state of multi-GPU technology.

I tagged Linus and Luke from LinusTechTips, the insanely intelligent and awesome Ryan Shrout from PC Perspective, the also super-smart Anshel Sag of Moor Insights & Strategy, and my BFF in life (sorry, wifey) Dimitry from Hardware Canucks.

Here's what Linus had to say:

Anshel replied, adding:

I totally agree with what Ryan had to say, adding that SLI/CF is like water cooling your PC, or putting your storage into RAID:

Ryan added:

Dimitry has ditched SLI/CF because of the issues I'm complaining about, tweeting:

Final Thoughts

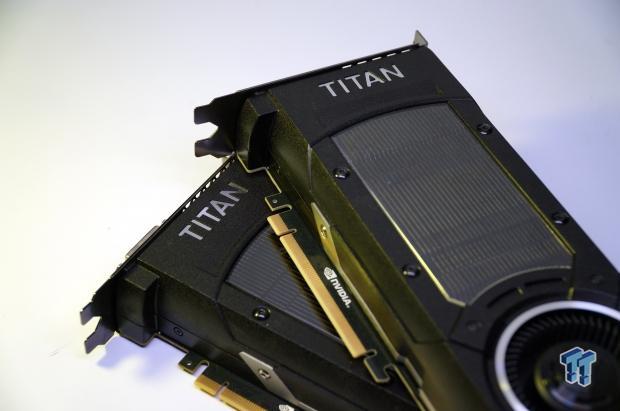

Wrapping up... I'm mad, seriously mad. Having $2000+ of video cards these days is nothing but "yeah, check out what I've got". It's kind of like having those expensive rims on your car, which do nothing for performance, but they can look great.

I've tried as much as I can not to say that NVIDIA and AMD are at fault here, but they could be doing so much more to make this issue go away. It's in their best interest to have consumers buying as many video cards as possible, but when a mid-range $200 card plays virtually every game on the market at 1080p 60FPS, why do you need a GTX 980 Ti or Fury X, or multiple of them?

Shouldn't there be a reason to own multiple video cards? When I spend 100% more money on a video card setup, I want 100% more performance - even in the real world, 50% or more performance would be nice, but this isn't what generally happens.

Why can't we have a reboot of Crysis where it requires two high-end $500+ video cards to run at 1080p 60FPS? Something where we have to wait a couple of years before we can run it at maximum detail on a $350 card, but it would look so insanely gorgeous that it would make the best-looking game out right now look like an Xbox or PS2 title.

I think VR will be the saving grace of multiple GPUs as NVIDIA and AMD can tune their GPUs to render per display/eye on the HMD. This will be so beneficial for high-end PC gamers, where we'll finally have a true reason to own multiple video cards, and enjoy nearly 100% scaling.

I still want a reboot of Crysis, built on DX12 and with VR support - where we need a GTX 980 Ti or Fury X to run it at high detail at 1080p 60FPS. If you want to run 4K 60FPS, you need two next-gen GPUs in SLI or Crossfire. If it looked 2-3x better than any other game right now, it would be worth it. We need another game that makes us continually ask "can it run X/Y/Z"? Instead of "FFS, this game is a mess on day one with console-level graphics".

Please?!