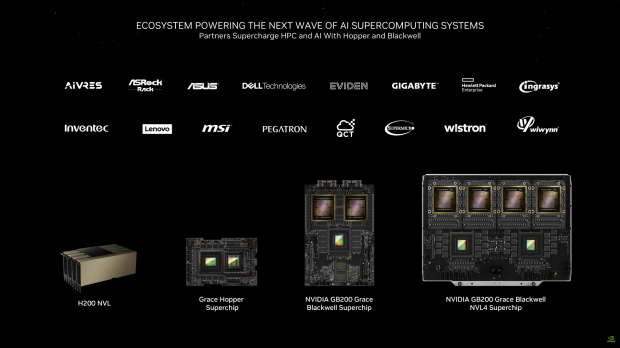

NVIDIA has announced the GB200 NVL4, a new module that extends the original GB200 Grace Blackwell Superchip AI solution, doubling the number of CPUs and GPUs while also increasing memory.

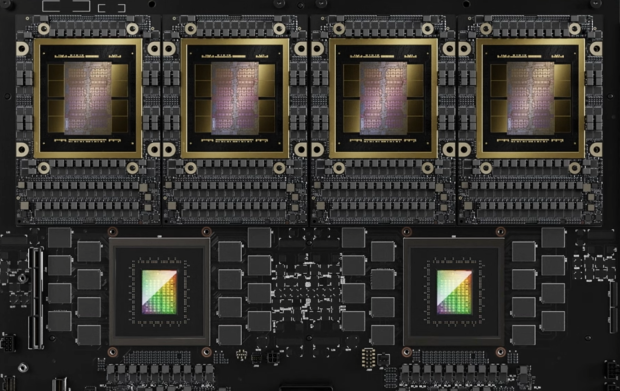

The new NVIDIA GB200 NVL4 features 4 x Blackwell GPUs on a larger board alongside 2 x Grace CPUs. The module is designed as a single-server solution, with a 4-way NVLink domain and a larger 1.3TB pool of coherent memory.

NVIDIA promises that the new GB200 NVL4 module delivers 2.2x the simulation performance and 1.8x the training and inference performance of the previous-gen Hopper GH200 NVL4. The GB200 Grace Blackwell Superchip uses around 2700W of power, and the larger GB200 NVL4 solution is expected to use around 6000W.

The new GB200 NVL4's 1.3TB of coherent memory is shared across all four GPUs over NVLink. NVIDIA says its new fifth-generation NVLink chip-to-chip interconnect enables high-speed communication between the CPUs and GPUs, with up to 1.8TB/sec of bidirectional throughput per GPU.

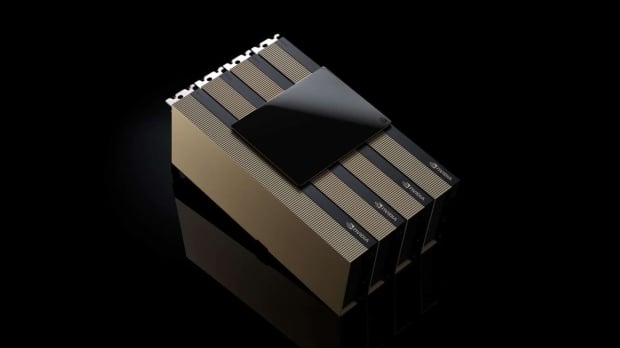

NVIDIA also extended its Hopper product stack with the new H200 NVL, a PCIe-based Hopper card that can connect up to 4 GPUs through an NVLink domain, offering 7x the bandwidth of a standard PCIe solution.
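To put the 7x figure in context, here is a rough back-of-the-envelope sketch. It assumes that "standard PCIe" means a PCIe Gen5 x16 slot, which the announcement as quoted here does not specify, and it ignores line-encoding overhead. The function name is purely illustrative.

```python
# Assumption: "standard PCIe" is a PCIe Gen5 x16 link (not stated in the article).
PCIE_GEN5_GT_PER_LANE = 32  # giga-transfers per second, per lane, per direction
PCIE_LANES = 16


def pcie_bidirectional_gb_s(gt_per_lane: float, lanes: int) -> float:
    """Nominal bidirectional bandwidth in GB/s, ignoring encoding overhead."""
    per_direction_gb_s = gt_per_lane * lanes / 8  # 1 bit per transfer, 8 bits per byte
    return 2 * per_direction_gb_s


pcie_gb_s = pcie_bidirectional_gb_s(PCIE_GEN5_GT_PER_LANE, PCIE_LANES)
nvlink_estimate_gb_s = 7 * pcie_gb_s  # the "7x" claim applied to the PCIe baseline

print(f"PCIe Gen5 x16 (bidirectional): {pcie_gb_s:.0f} GB/s")
print(f"7x that baseline:              {nvlink_estimate_gb_s:.0f} GB/s")
```

Under that assumption the estimate lands just under 900GB/s, which is in line with the NVLink bandwidth NVIDIA quotes for Hopper-generation GPUs.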

The company says that its new H200 NVL solution can fit into any data center, offering a range of flexible server configurations that can be optimized for hybrid HPC and AI workloads.

NVIDIA's new H200 NVL solution has 1.5x the HBM memory, 1.7x the LLM inference performance, and 1.3x the HPC performance of the H100 NVL. There are 114 SMs in total with 14,592 CUDA cores, 456 Tensor Cores, and up to 3 PFLOPs of FP8 (FP16 accumulated) performance. There's 80GB of HBM2e memory on a 5120-bit memory interface and up to 350W TDP.
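The core counts quoted above follow directly from the SM count. As a quick sanity check, the sketch below assumes the per-SM layout of the Hopper architecture (128 FP32 CUDA cores and 4 Tensor Cores per SM), which the article itself does not state. The helper name is illustrative.

```python
# Assumption: Hopper per-SM layout (not stated in the article).
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4


def core_counts(sm_count: int) -> tuple[int, int]:
    """Return (CUDA cores, Tensor Cores) for a given number of enabled SMs."""
    return sm_count * CUDA_CORES_PER_SM, sm_count * TENSOR_CORES_PER_SM


cuda_cores, tensor_cores = core_counts(114)
print(cuda_cores, tensor_cores)  # 14592 456
```

With 114 SMs, the result matches the 14,592 CUDA cores and 456 Tensor Cores listed in the specifications.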