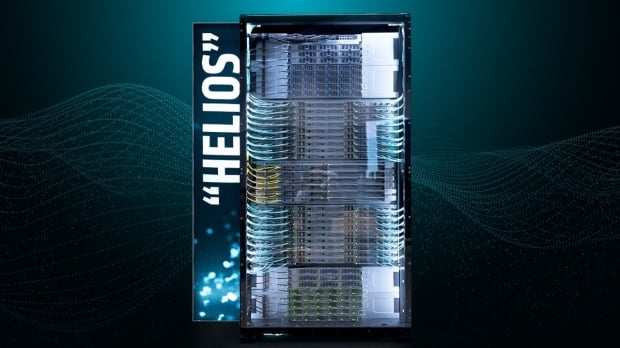

AMD has showcased its next-gen Helios rack-scale AI solution, teaming with Meta and the Open Compute Project community on what it calls its most advanced rack-scale reference system.

AMD's next-gen Helios AI rack is powered by the AMD CDNA architecture, with Instinct MI450 series GPUs each carrying up to 432GB of HBM4 memory and up to 19.6TB/sec of memory bandwidth. The rack houses 72 MI450 series AI GPUs that together deliver up to 1.4 exaFLOPS of FP8 and 2.9 exaFLOPS of FP4 performance, with 31TB of total HBM4 memory and 1.4PB/sec of aggregate bandwidth - a generational leap that enables trillion-parameter training and large-scale AI inference.
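The rack-level memory figures follow from the per-GPU numbers quoted above. As a quick sanity check, here is a minimal sketch of that arithmetic; it assumes decimal units (1TB = 1,000GB, 1PB = 1,000TB) and that every GPU carries the maximum quoted configuration, and the function and variable names are illustrative only.

```python
# Per-GPU figures quoted in the article for the Instinct MI450 series.
GPUS_PER_RACK = 72
HBM4_PER_GPU_GB = 432          # "up to" capacity per GPU
BANDWIDTH_PER_GPU_TBPS = 19.6  # "up to" memory bandwidth per GPU


def rack_totals(gpus, hbm_gb, bw_tbps):
    """Return (total memory in TB, aggregate bandwidth in PB/sec).

    Assumes decimal prefixes and a fully populated rack in which every
    GPU has the maximum quoted memory configuration.
    """
    total_memory_tb = gpus * hbm_gb / 1000
    total_bandwidth_pbps = gpus * bw_tbps / 1000
    return total_memory_tb, total_bandwidth_pbps


memory_tb, bandwidth_pbps = rack_totals(
    GPUS_PER_RACK, HBM4_PER_GPU_GB, BANDWIDTH_PER_GPU_TBPS
)
print(f"Total HBM4: {memory_tb:.1f} TB")
print(f"Aggregate memory bandwidth: {bandwidth_pbps:.2f} PB/sec")
```

Under those assumptions the totals come out at roughly 31.1TB and 1.41PB/sec, which is consistent with the rounded 31TB and 1.4PB/sec in AMD's figures.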

Helios also sports up to 260TB/sec of scale-up interconnect bandwidth, backed by 43TB/sec of Ethernet-based scale-out bandwidth, for seamless communication between GPUs, nodes, and racks. AMD says that Helios delivers up to 17.9x higher performance than previous-gen racks, and 50% more memory capacity and bandwidth than NVIDIA's next-gen Vera Rubin AI system.
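To put the rack-level interconnect numbers in per-GPU terms, the sketch below divides them across the 72 GPUs in the rack. This is a back-of-the-envelope estimate, not an AMD specification: it assumes the bandwidth is shared evenly across all GPUs, which AMD's figures here do not confirm, and the names are illustrative.

```python
# Rack-level interconnect figures quoted in the article.
GPUS_PER_RACK = 72
SCALE_UP_TBPS = 260   # scale-up interconnect bandwidth for the rack
SCALE_OUT_TBPS = 43   # Ethernet-based scale-out bandwidth for the rack


def per_gpu_share(rack_tbps, gpus):
    """Average bandwidth per GPU in TB/sec, assuming an even split (an assumption)."""
    return rack_tbps / gpus


scale_up_per_gpu = per_gpu_share(SCALE_UP_TBPS, GPUS_PER_RACK)
scale_out_per_gpu = per_gpu_share(SCALE_OUT_TBPS, GPUS_PER_RACK)

print(f"Scale-up per GPU:  {scale_up_per_gpu:.2f} TB/sec")
print(f"Scale-out per GPU: {scale_out_per_gpu:.2f} TB/sec")
print(f"Scale-up vs scale-out: {SCALE_UP_TBPS / SCALE_OUT_TBPS:.1f}x")
```

On an even split that works out to about 3.61TB/sec of scale-up and 0.60TB/sec of scale-out bandwidth per GPU, with roughly six times more bandwidth inside the rack than leaving it.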

Forrest Norrod, executive vice president and general manager, Data Center Solutions Group, explains: "With 'Helios,' we're turning open standards into real, deployable systems - combining AMD Instinct GPUs, EPYC CPUs, and open fabrics to give the industry a flexible, high-performance platform built for the next generation of AI workloads."

Purpose-Built for Modern Data Center Realities

AI data centers are evolving rapidly, demanding architectures that deliver greater performance, efficiency, and serviceability at scale. "Helios" is purpose-built to meet these needs with innovations that simplify deployment, improve manageability, and sustain performance in dense AI environments.

- Higher scale-out throughput and HBM bandwidth compared to previous generations enable faster model training and inference.

- Double-wide layout reduces weight density and improves serviceability.

- Standards-based Ethernet scale-out ensures multipath resiliency and seamless interoperability.

- Backside quick-disconnect liquid cooling provides sustained, efficient thermal performance at high density.

Together, these features make the AMD "Helios" Rack a deployable, production-ready system for customers scaling to exascale AI - delivering breakthrough performance with operational efficiency and sustainability.