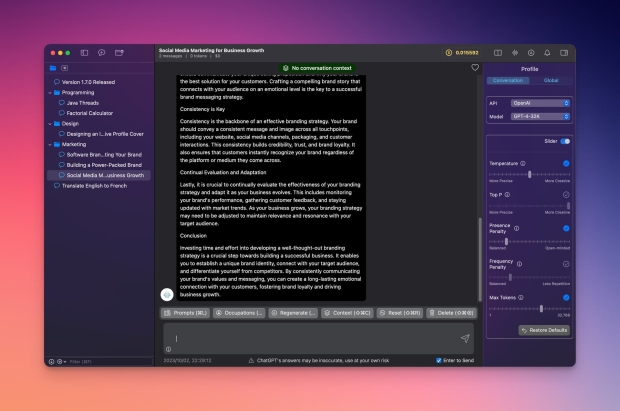

OpenAI's ChatGPT app for macOS was found to have a security flaw that made it extremely easy to find your chats with the AI-powered tool on your device. Because the chats were stored in plain text, anyone with access to the files could read them.

The flaw was demonstrated by Pedro José Pereira Vieito on Threads and replicated by The Verge. Until Friday of last week, ChatGPT for macOS saved chat logs on the device right after they were sent, which made it extremely easy for another app, one built by Pereira Vieito, to access them. The problem with this exploit?

If a user is having private conversations with ChatGPT that include sensitive information, such as financial details or passwords, a bad actor with access to the computer would have an easy way to track and save all of those conversations. OpenAI was alerted to the issue and rolled out an update that fixed the exploit, saying the new version encrypts the conversations.

"I was curious about why [OpenAI] opted out of using the app sandbox protections and ended up checking where they stored the app data," Pereira Vieito said.

"We are aware of this issue and have shipped a new version of the application which encrypts these conversations," OpenAI spokesperson Taya Christianson says in a statement to The Verge. "We're committed to providing a helpful user experience while maintaining our high-security standards as our technology evolves."
